MapReduce Using Apache Spark

In the world of big data, processing vast amounts of information efficiently is crucial. MapReduce, a programming model introduced by Google, revolutionized how we handle large datasets. Apache Spark, a powerful data processing engine, takes this concept to the next level by providing an easy-to-use interface and remarkable performance.

In this article, we will explore how to implement MapReduce using Apache Spark, diving into its core concepts, benefits, and practical applications. Whether you’re a data scientist, developer, or just someone curious about big data, understanding MapReduce with Spark can significantly enhance your skill set. Let’s embark on this journey to uncover the power of MapReduce in the Apache Spark ecosystem.

Understanding MapReduce

MapReduce is a programming model that allows for the processing of large data sets with a distributed algorithm. The model consists of two main functions: the Map function, which processes input data and generates key-value pairs, and the Reduce function, which processes those key-value pairs to produce a smaller set of output data. This paradigm is particularly useful for tasks like sorting, filtering, and aggregating data.

Apache Spark enhances the traditional MapReduce model by providing in-memory processing capabilities, which drastically improves performance. Unlike Hadoop MapReduce, which writes intermediate results to disk, Spark keeps data in memory, allowing for faster computations. This makes Spark an ideal choice for iterative algorithms, such as those used in machine learning and graph processing.

Setting Up Apache Spark

To get started with MapReduce in Apache Spark, you need to set up your Spark environment. You can do this either locally or on a cluster. For local setup, download Apache Spark from the official website and follow the installation instructions. If you’re using a cluster, ensure that Spark is properly installed on all nodes.

Once your setup is complete, you can run Spark applications using the Spark shell or submit jobs using the spark-submit command. This flexibility allows you to develop and test your applications quickly.

Implementing MapReduce with Spark

Word Count Example

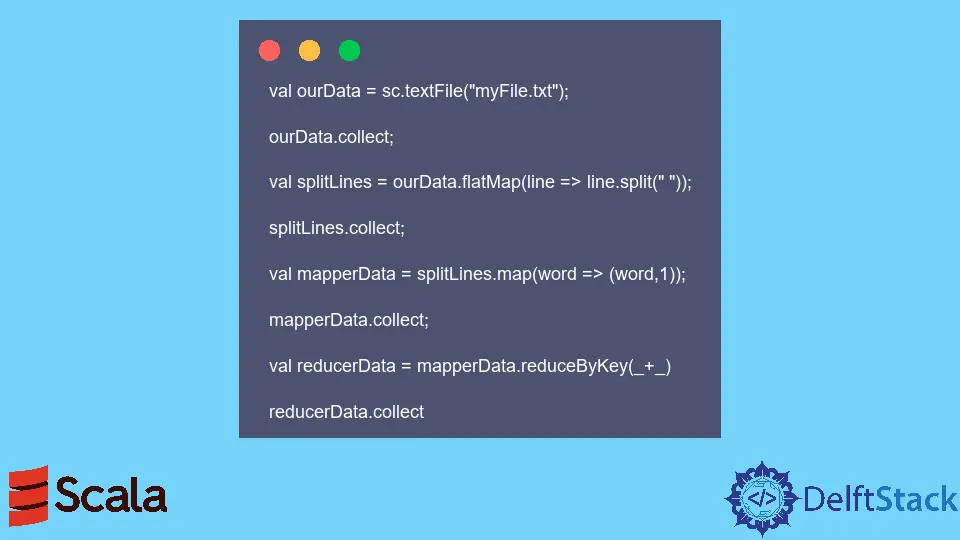

One of the most common examples to illustrate MapReduce is the word count problem. In this example, we will count the occurrences of each word in a given text file. Here’s how you can implement this using Apache Spark.

from pyspark import SparkContext

sc = SparkContext("local", "Word Count")

text_file = sc.textFile("path/to/textfile.txt")

word_counts = text_file.flatMap(lambda line: line.split(" ")) \

.map(lambda word: (word, 1)) \

.reduceByKey(lambda a, b: a + b)

word_counts.saveAsTextFile("path/to/output")

Output:

(word1, count1)

(word2, count2)

...

In this code, we first initialize a Spark context. We then read a text file into an RDD (Resilient Distributed Dataset). The flatMap function splits each line into words, and the map function creates a tuple of each word with an initial count of 1. Finally, reduceByKey aggregates the counts for each word. The results are saved to an output directory.

Finding Maximum Value

Another common use case for MapReduce is finding the maximum value in a dataset. This can be efficiently achieved using Spark as well.

from pyspark import SparkContext

sc = SparkContext("local", "Max Value")

data = sc.textFile("path/to/numbers.txt")

max_value = data.map(lambda x: int(x)).max()

print(max_value)

Output:

max_value

In this example, we start by creating a Spark context and reading a file containing numbers. We then convert each line to an integer and use the max function to find the maximum value in the dataset. This approach is straightforward and leverages Spark’s ability to handle large datasets efficiently.

Data Aggregation

Data aggregation is another powerful feature of MapReduce in Spark. Let’s say we want to calculate the average of a set of numbers grouped by a specific key. Here’s how we can do it.

from pyspark import SparkContext

sc = SparkContext("local", "Average Calculation")

data = sc.textFile("path/to/data.txt")

pairs = data.map(lambda line: line.split(",")) \

.map(lambda x: (x[0], (float(x[1]), 1)))

aggregated = pairs.reduceByKey(lambda a, b: (a[0] + b[0], a[1] + b[1]))

averages = aggregated.mapValues(lambda x: x[0] / x[1])

averages.saveAsTextFile("path/to/output")

Output:

(key1, average1)

(key2, average2)

...

In this code snippet, we read a CSV file where each line contains a key and a numeric value. We create pairs of (key, (value, count)) and then use reduceByKey to aggregate the sums and counts. Finally, we calculate the averages and save the results. This method showcases how Spark can efficiently handle complex data transformations and aggregations.

Conclusion

MapReduce using Apache Spark is a powerful approach to processing large datasets efficiently. With its in-memory capabilities and easy-to-use APIs, Spark allows developers to implement complex data processing tasks with relative ease. Whether you’re tackling word counts, finding maximum values, or performing data aggregations, Spark’s flexibility and performance make it an outstanding choice for big data applications. By mastering these concepts, you can significantly enhance your data processing skills and contribute to your organization’s data-driven decision-making processes.

FAQ

-

What is MapReduce?

MapReduce is a programming model for processing large data sets with a distributed algorithm, consisting of two main functions: Map and Reduce. -

How does Apache Spark improve upon traditional MapReduce?

Apache Spark improves upon traditional MapReduce by providing in-memory processing, which significantly speeds up data computations compared to writing intermediate results to disk. -

Can I use Python with Apache Spark?

Yes, Apache Spark supports Python through the PySpark library, enabling developers to write Spark applications in Python. -

What are some common use cases for MapReduce in Spark?

Common use cases include word count, data aggregation, and filtering large datasets, among others. -

How do I set up Apache Spark on my local machine?

You can download Apache Spark from the official website and follow the installation instructions to set it up locally.