How to Tokenize a String in JavaScript

- Understanding Tokenization in JavaScript

- Method 1: Using the split() Method

- Method 2: Using Regular Expressions

- Method 3: Using the Lodash Library

- Conclusion

- FAQ

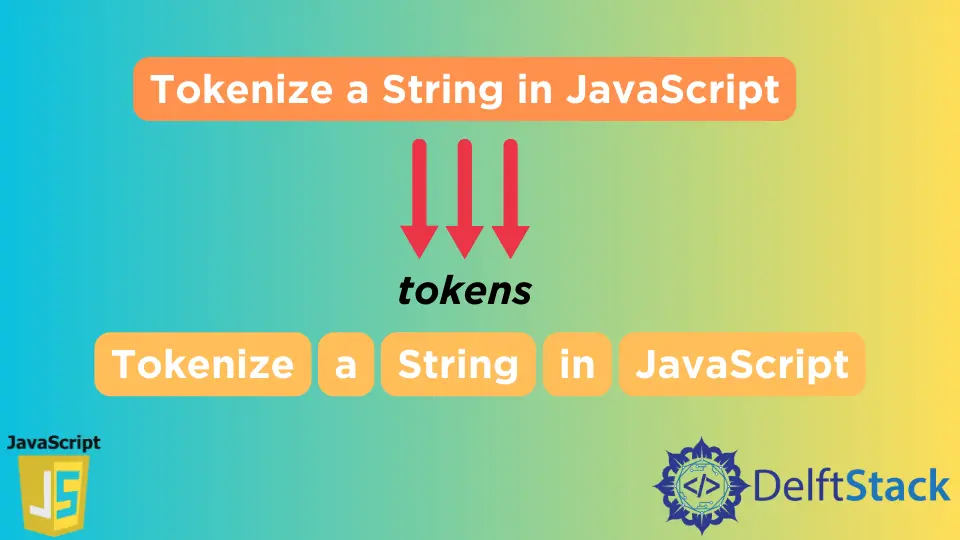

Tokenization is a fundamental technique for parsing and manipulating strings in JavaScript. As developers, we often need to break a large string into smaller, manageable pieces, or tokens. This process is useful in many applications, including data processing, natural language processing, and game development.

In this article, we’ll explore different methods to tokenize a string in JavaScript, providing you with practical code examples and detailed explanations. Whether you’re a beginner or an experienced developer, this guide will enhance your understanding of string manipulation in JavaScript.

Understanding Tokenization in JavaScript

Tokenization is the process of splitting a string into smaller segments, called tokens. This can be done using various delimiters like spaces, commas, or custom characters. In JavaScript, there are several methods available to achieve this. The most common approaches include using the split() method, regular expressions, and libraries like Lodash. Each method has its own advantages and use cases.

Method 1: Using the split() Method

One of the simplest ways to tokenize a string in JavaScript is by using the built-in split() method. This method divides a string into an array of substrings based on a specified delimiter.

const str = "Tokenization, in JavaScript, is essential.";

const tokens = str.split(" ");

console.log(tokens);

Output:

[ 'Tokenization,', 'in', 'JavaScript,', 'is', 'essential.' ]

The split() method takes a separator as its argument, which can be either a string or a regular expression. In the example above, we used a single space as the separator, which breaks the string into individual words. The resulting array contains each word as a separate element, with any adjacent punctuation still attached.

While split() is straightforward, it has limitations. For instance, if you want to split a string by multiple delimiters, you’ll need to use regular expressions. Additionally, split() does not remove punctuation, so tokens may still contain unwanted characters.
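One more limitation is worth seeing in practice: with a plain string delimiter, runs of spaces and leading or trailing spaces produce empty tokens. The sketch below shows the effect and one possible cleanup. The helper name splitOnSpaces is purely illustrative and not part of any library.

```javascript
// Hypothetical helper: split on single spaces, then drop the empty strings
// that split(" ") produces for runs of spaces or leading/trailing spaces.
function splitOnSpaces(text) {
  return text.split(" ").filter((token) => token.length > 0);
}

const raw = "  Tokenization   in JavaScript ";

console.log(raw.split(" "));
// [ '', '', 'Tokenization', '', '', 'in', 'JavaScript', '' ]

console.log(splitOnSpaces(raw));
// [ 'Tokenization', 'in', 'JavaScript' ]
```

Filtering after the split keeps the example free of regular expressions, which fits simple inputs; for messier input, a regex delimiter is usually the cleaner option.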

Method 2: Using Regular Expressions

Regular expressions (regex) offer a more flexible way to tokenize strings. They allow you to define complex patterns and split strings based on those patterns.

const str = "Tokenization, in JavaScript; is essential.";

const tokens = str.split(/[\s,;]+/);

console.log(tokens);

Output:

[ 'Tokenization', 'in', 'JavaScript', 'is', 'essential.' ]

In this example, we passed a regex pattern to split(). The pattern /[\s,;]+/ matches any whitespace character (\s), comma, or semicolon. The + quantifier ensures that consecutive delimiters are treated as a single delimiter. Because commas and semicolons are part of the delimiter pattern, they are removed from the tokens. The period is not in the pattern, so the last token is still 'essential.' with its trailing period.
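As a sketch of how the character class can be extended, the variation below also treats periods, exclamation marks, and question marks as delimiters. Because the string then ends with a delimiter, split() returns a trailing empty string, so the example filters it out. The variable names are illustrative.

```javascript
const sentence = "Tokenization, in JavaScript; is essential.";

// Treat whitespace and common punctuation as delimiters.
const delimiters = /[\s,;.!?]+/;

// A delimiter at the very end of the string leaves an empty last token,
// so remove empty strings after splitting.
const cleanTokens = sentence.split(delimiters).filter(Boolean);

console.log(cleanTokens);
// [ 'Tokenization', 'in', 'JavaScript', 'is', 'essential' ]
```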

Regular expressions can be complex, but they provide powerful capabilities for string manipulation. By mastering regex, you can handle various tokenization scenarios effectively.
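One scenario where regex goes beyond split() is matching the tokens themselves rather than the delimiters between them. The sketch below uses String.prototype.matchAll() with named capture groups to label each token by type. The token categories (number, word, punct) and the helper name tokenize are assumptions made for illustration, not a standard grammar.

```javascript
// Each named group describes one kind of token (an assumed, minimal grammar).
const TOKEN_PATTERN =
  /(?<number>\d+(?:\.\d+)?)|(?<word>[A-Za-z_]\w*)|(?<punct>[^\s\w])/g;

// Hypothetical helper: return a { type, value } object for every match.
function tokenize(text) {
  const tokens = [];
  for (const match of text.matchAll(TOKEN_PATTERN)) {
    // Exactly one named group is defined per match; find which one.
    const [type, value] = Object.entries(match.groups).find(
      ([, captured]) => captured !== undefined
    );
    tokens.push({ type, value });
  }
  return tokens;
}

console.log(tokenize("price = 42.5;"));
// [
//   { type: 'word', value: 'price' },
//   { type: 'punct', value: '=' },
//   { type: 'number', value: '42.5' },
//   { type: 'punct', value: ';' }
// ]
```

Whitespace matches none of the groups, so it is skipped without any extra filtering step.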

Method 3: Using the Lodash Library

If you’re working on a larger project, using a utility library like Lodash can simplify the tokenization process. Lodash provides a method called _.words() that splits a string into an array of words, handling punctuation and whitespace.

// Assuming Lodash is included in your project

const _ = require('lodash');

const str = "Tokenization, in JavaScript is essential.";

const tokens = _.words(str);

console.log(tokens);

Output:

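[ 'Tokenization', 'in', 'Java', 'Script', 'is', 'essential' ]

The _.words() method splits the string into words, dropping punctuation and extra spaces. Note that it also breaks words at camelCase boundaries, which is why "JavaScript" becomes 'Java' and 'Script'. If that is not what you want, _.words() accepts a regex pattern as an optional second argument to control what counts as a word. This makes it a good choice when you need quick word extraction without writing your own pattern, as long as its word-boundary rules suit your input.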

Using Lodash can enhance code readability and reduce the likelihood of errors, especially in larger applications where string manipulation is common.

Conclusion

Tokenization is an essential skill for any JavaScript developer. Whether you choose to use the simple split() method, the power of regular expressions, or a utility library like Lodash, understanding how to tokenize strings will improve your ability to manipulate and analyze text data. By applying these methods in your projects, you can handle string parsing efficiently and effectively.

FAQ

- What is tokenization in JavaScript?

Tokenization is the process of splitting a string into smaller segments or tokens based on specified delimiters.

- What is the simplest method to tokenize a string?

The simplest method is using the split() method, which divides a string into an array of substrings based on a specified delimiter.

- How can I remove punctuation while tokenizing?

You can use regular expressions with the split() method to remove punctuation while tokenizing the string.

- What is Lodash, and how does it help with tokenization?

Lodash is a popular utility library that provides various functions for common programming tasks, including the _.words() method for easy string tokenization.

- Can I tokenize a string by multiple delimiters?

Yes, you can use regular expressions to specify multiple delimiters when tokenizing a string.